We fine-tuned Qwen-Image-Edit on the brand-new Pico-Banana-400K dataset using our EigenTrain platform, introduced a Lightning option that produces edits in as few as 4 steps, and rounded out the stack with EigenInference (production-safe inference optimizations) and EigenDeploy (secure, autoscaling deployments for regulated enterprises). We’re also open-sourcing a LoRA branch that runs in Diffusers and DiffSynth-Studio, with a hosted demo for a much faster experience.

1) Pico-Banana-400K

Pico-Banana-400K is a large-scale, instruction-based image editing dataset built from real photos. It contains ~400K text–image–edit triplets spanning 35 edit operations across 8 semantic categories, with both single-turn and multi-turn supervision. The authors generate human-like edit instructions (e.g., via Gemini-2.5-Flash), produce edits (via Nano-Banana), and auto-screen quality (via Gemini-2.5-Pro) to ensure instruction faithfulness and content preservation. Source images come from Open Images.
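To make the shape of the data concrete, here is a minimal sketch of how one example could be represented in memory. The class and field names (`EditTurn`, `EditExample`, `edit_type`, `category`) and the sample values are our own illustrative assumptions, not the dataset's published schema; check the dataset repository for the real format.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class EditTurn:
    """One instruction-guided edit (field names are hypothetical)."""
    instruction: str   # natural-language edit instruction
    edited_image: str  # path or URL of the edited result
    edit_type: str     # one of the 35 edit operations (label is illustrative)
    category: str      # one of the 8 semantic categories (label is illustrative)


@dataclass
class EditExample:
    """A source photo plus one or more sequential edits."""
    source_image: str
    turns: List[EditTurn] = field(default_factory=list)

    @property
    def is_multi_turn(self) -> bool:
        # Single-turn examples carry exactly one edit; multi-turn carry several.
        return len(self.turns) > 1


# Illustrative single-turn record; all values are made up.
example = EditExample(
    source_image="photo_0001.jpg",
    turns=[
        EditTurn(
            instruction="Apply a vintage film aesthetic to the image.",
            edited_image="photo_0001_edit.jpg",
            edit_type="add_film_grain",
            category="stylistic",
        )
    ],
)
```

Modeling single-turn examples as a one-element list of turns lets the same structure cover both kinds of supervision.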

2) EigenPlatform

EigenTrain

EigenTrain unifies SFT, offline RL, and online RL for training text LLMs and VLMs, and includes first-class workflows for multimodal image/video generation. It’s designed for teams that need to turn new datasets into high-quality models fast:

- Pipeline builders: Orchestrate SFT, reward modeling, and PPO/GRPO end-to-end, with guardrails, managed checkpoints, and automatic resume to keep every run reproducible.

- One platform, all modalities: Train text LLMs, VLMs, and image/video generators on the same platform.

- Distillation tracks: Distill frontier models into compact specialists via task-aware teachers, low-rank adapters, and calibration passes that preserve quality while cutting latency and cost.

- Agent tuning, MCP-ready: Tune any agent model by plugging into MCP-compatible tool-execution frameworks to call external APIs or self-hosted functions, capture rich tool/state traces, and train with on-policy and off-policy loops for robust real-world behavior.

EigenInference

Production-safe inference optimizations (quantization, sparsity, distillation, routing, and more) to meet strict latency and cost targets across LLM, VLM, and multi-modal workloads.

- Quantization & sparsity: 4-bit/8-bit quantization plus structured/unstructured pruning to cut memory and accelerate throughput with tight quality bounds.

- Distillation & few-step diffusion: Distill generalists into compact specialists and enable lightning diffusion variants that sample in a handful of steps while preserving quality.

- Adaptive batching & speculative decoding: Dynamic batching and speculative decoding to boost tokens/sec and reduce tail latency; includes KV-cache optimization for long contexts.

- Routing & observability: Policy-based routing across model families and shards, with hot-path profiling and token-level tracing for SLO enforcement and rapid debugging.
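As a rough illustration of why the quantization bullet above matters for memory, the weight footprint scales linearly with bits per weight. The sketch below is back-of-the-envelope arithmetic only: the 20B parameter count is a hypothetical example, and the estimate ignores activations, KV cache, and quantization metadata such as scales and zero points.

```python
def weight_memory_gib(num_params: float, bits_per_weight: int) -> float:
    """Approximate memory for model weights alone, in GiB."""
    bytes_total = num_params * bits_per_weight / 8
    return bytes_total / 2**30


PARAMS = 20e9  # hypothetical 20B-parameter model, for illustration only

for bits in (16, 8, 4):
    print(f"{bits}-bit weights: {weight_memory_gib(PARAMS, bits):.1f} GiB")
```

Going from 16-bit to 4-bit weights cuts the weight footprint by 4x under these assumptions; real savings depend on which layers are quantized and on the storage format.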

EigenDeploy

Resilient deployments in the cloud or on your own hardware: choose serverless, on-demand, or dedicated serving.

- Scale & stability: Traffic-aware autoscaling, hot restarts on error bursts, multi-replica HA with safe rollbacks.

- Run anywhere: Managed in our cloud, VPC-peered, or on-prem/self-built GPU clusters across regions and continents.

- Operate with confidence: Deep observability, cost guardrails, quotas, and audit-ready access controls.

3) Eigen-Banana-Qwen-Image-Edit

We started from Qwen-Image-Edit—a strong, Apache-2.0 image-editing foundation that supports precise text editing and both appearance-preserving and semantic edits. Using EigenTrain, we fine-tuned it on Pico-Banana-400K to create Eigen-Banana-Qwen-Image-Edit.

Why Qwen-Image-Edit as the base?

- Proven general-purpose editing capabilities with accurate text editing.

- Solid integration in Diffusers (QwenImage pipelines), making it easy to adopt in existing stacks.

Lightning: 4-step generation

We also optimized a Lightning variant that generates high-quality edits in 4 steps—great for interactive tools and low-latency workloads. Our Lightning build follows the community’s Qwen-Image-Lightning approach (FlowMatch-style scheduler + LoRA distillation) and supports 4-step presets.

What this means for you

- Snappy UX: single-digit steps enable near-instant previews and rapid iteration.

- Lower cost: fewer steps = fewer FLOPs per edit.

- Quality preserved: careful distillation keeps structure and instruction faithfulness intact.
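The "fewer steps = fewer FLOPs" point can be sketched with simple arithmetic: the number of denoiser forward passes grows linearly with the step count, and classifier-free guidance (CFG) doubles it. The 50-step CFG baseline and the assumption that the 4-step variant runs without CFG are illustrative choices on our part, not measured settings, and the count ignores text-encoder and VAE costs.

```python
def denoiser_passes(steps: int, uses_cfg: bool) -> int:
    """Forward passes through the denoiser for one edit."""
    # With CFG, each step runs a conditional and an unconditional pass.
    return steps * (2 if uses_cfg else 1)


baseline = denoiser_passes(50, uses_cfg=True)    # assumed baseline configuration
lightning = denoiser_passes(4, uses_cfg=False)   # assumed 4-step setting, no CFG

reduction = baseline / lightning
print(f"baseline: {baseline} passes, 4-step: {lightning} passes, {reduction:.0f}x fewer")
```

Wall-clock gains will usually be smaller than the pass-count ratio, since fixed costs such as encoding the prompt and decoding the image do not shrink with the step count.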

Open-source LoRA release

Eigen-Banana-Qwen-Image-Edit is a LoRA (Low-Rank Adaptation) checkpoint for the Qwen-Image-Edit model, optimized for fast, high-quality, text-guided image editing. It needs fewer inference steps than the base model while maintaining quality. Access model weights from here.

Use it in DiffSynth-Studio or try it in Diffusers (QwenImage / Qwen-Image-Edit pipelines).
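For the Diffusers route, a minimal sketch might look like the following. `LORA_REPO` and `LORA_FILE` are hypothetical placeholders (substitute the identifiers from the model page), `build_edit_kwargs` and `run_edit` are helper names we made up for this example, and `true_cfg_scale=1.0` is an assumption borrowed from how few-step distilled LoRAs are typically run. It assumes a recent Diffusers release that ships `QwenImageEditPipeline` and a CUDA GPU with enough memory.

```python
from typing import Any, Dict

BASE_MODEL = "Qwen/Qwen-Image-Edit"
LORA_REPO = "your-org/your-eigen-banana-lora"   # hypothetical placeholder
LORA_FILE = "your-lora-weights.safetensors"     # hypothetical placeholder


def build_edit_kwargs(prompt: str, steps: int = 4) -> Dict[str, Any]:
    """Sampling arguments for a few-step edit (values are assumptions)."""
    if steps < 1:
        raise ValueError("steps must be at least 1")
    return {
        "prompt": prompt,
        "num_inference_steps": steps,
        "true_cfg_scale": 1.0,  # assumption: no classifier-free guidance
    }


def run_edit(image_path: str, prompt: str, out_path: str,
             steps: int = 4, seed: int = 0) -> None:
    """Load the base pipeline, attach the LoRA, and save one edited image."""
    # Heavy imports stay local so the helper above works without a GPU stack.
    import torch
    from diffusers import QwenImageEditPipeline
    from PIL import Image

    pipe = QwenImageEditPipeline.from_pretrained(BASE_MODEL, torch_dtype=torch.bfloat16)
    pipe.load_lora_weights(LORA_REPO, weight_name=LORA_FILE)
    pipe.to("cuda")

    image = Image.open(image_path).convert("RGB")
    with torch.inference_mode():
        result = pipe(
            image=image,
            generator=torch.manual_seed(seed),
            **build_edit_kwargs(prompt, steps),
        )
    result.images[0].save(out_path)
```

Calling `run_edit("input.png", "Add a subtle film grain", "output.png")` would then write the edited image to disk; if the output looks off, the step count and guidance settings recommended on the model page should take precedence over the values assumed here.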

For a much faster hosted version without any quality loss, visit our demo here.

4) Qualitative Comparison

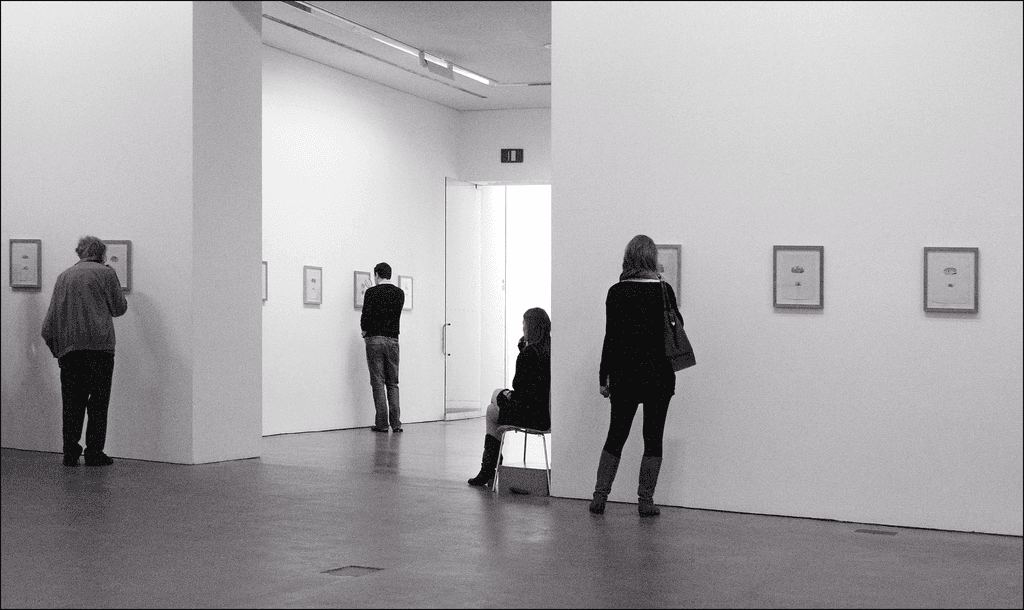

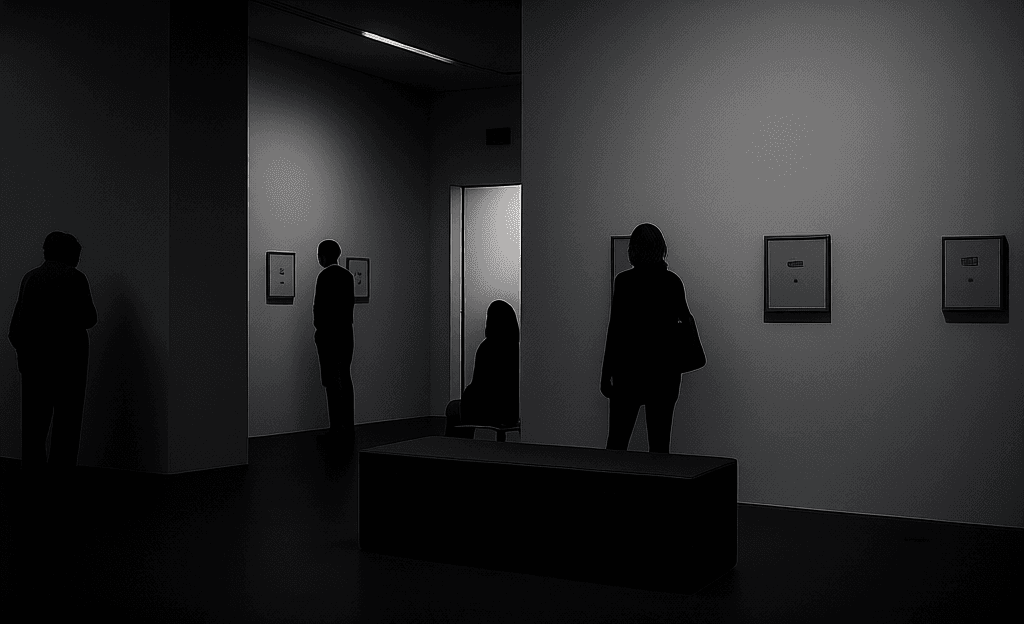

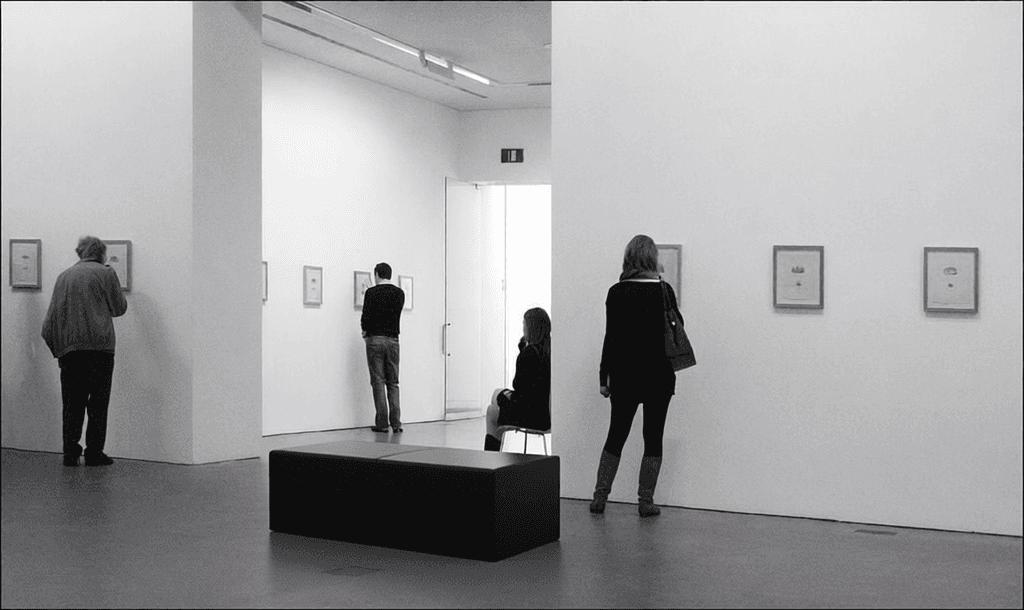

Example 1 - Add a new object to the scene

Prompt: Integrate a minimalist, dark-toned, rectangular gallery bench into the mid-ground, positioned slightly to the right of the central pillar and facing the right wall, ensuring its texture, lighting, and subtle shadows are consistent with the existing black and white aesthetic and diffused ambient light of the art gallery.

Qwen-Image-Edit

Eigen-Banana (⚡Lightning)

Example 2 - Add a film grain/filter

Prompt: Apply a vintage film aesthetic to the image, featuring a subtle desaturation of colors with a warm, golden-hour tone, introduce a fine and natural-looking film grain across the entire scene, gently reduce overall contrast for a softer appearance, and add a very faint, dark vignette to the edges to mimic an aged photographic print.

Qwen-Image-Edit

Eigen-Banana (⚡Lightning)

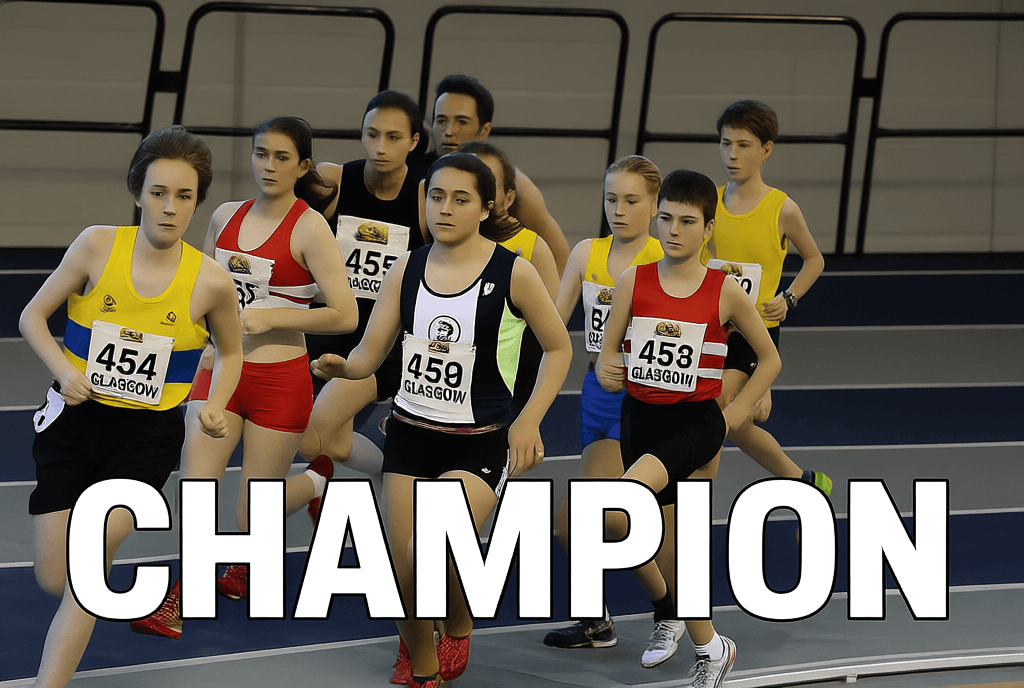

Example 3 - Add new text

Prompt: Add the text "CHAMPION" in a bold, sans-serif font, horizontally aligned below the existing "GLASGOW" text on the race bib of the runner wearing number 454 (yellow singlet), ensuring the text color, lighting, and subtle fabric distortion match the existing elements on the bib.

Qwen-Image-Edit

Eigen-Banana (⚡Lightning)

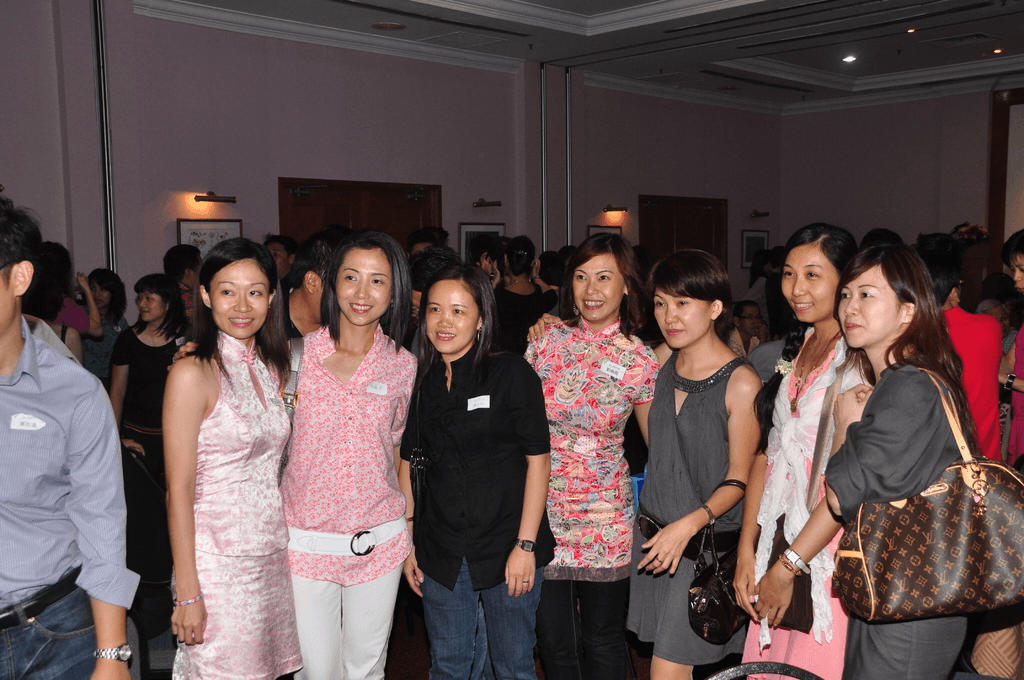

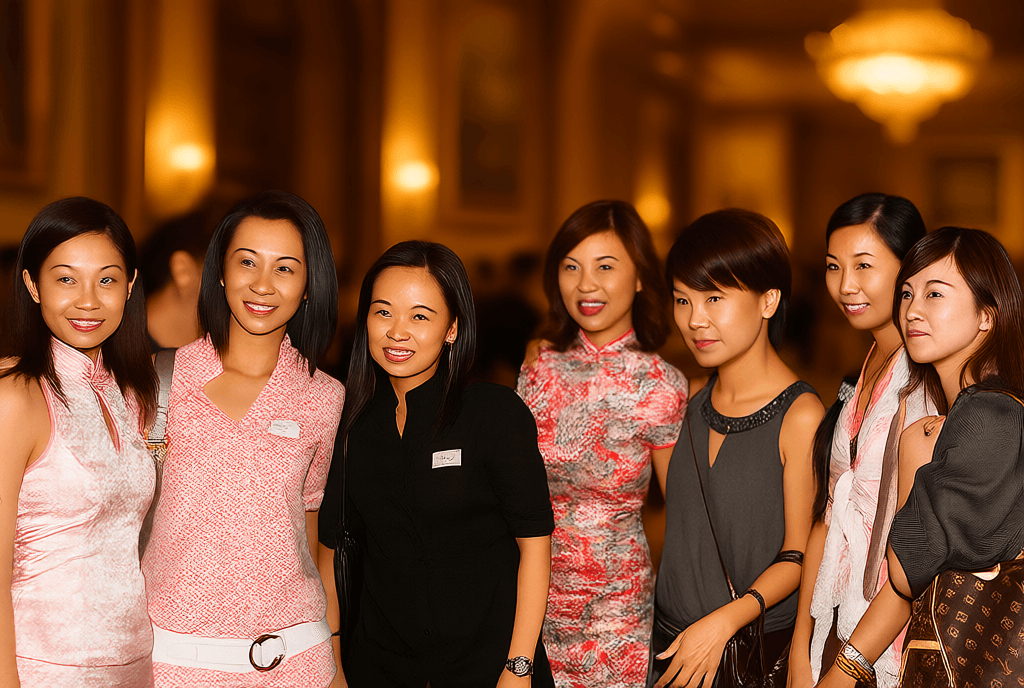

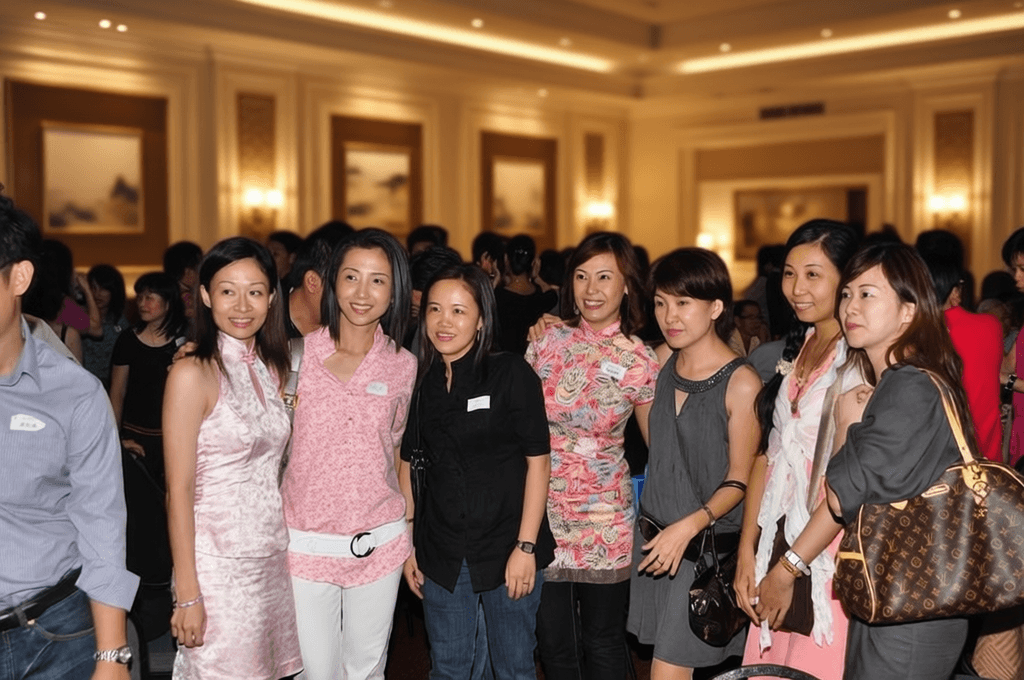

Example 4 - Add a new scene/background

Prompt: Replace the current plain wall background with a sophisticated, softly lit indoor event space, featuring warm golden ambient lighting, elegant architectural details such as decorative panels or subtle artwork, and a slightly blurred depth of field to keep the focus on the subjects while ensuring the new background's rich, muted tones complement their attire.

Qwen-Image-Edit

Eigen-Banana (⚡Lightning)

Example 5 - Modify expressions

Prompt: Adjust the subject's facial expression to a subtle, closed-mouth smile, ensuring natural skin folds and realistic lighting on the face, while maintaining the existing head posture and integrating seamlessly with the overall image context.

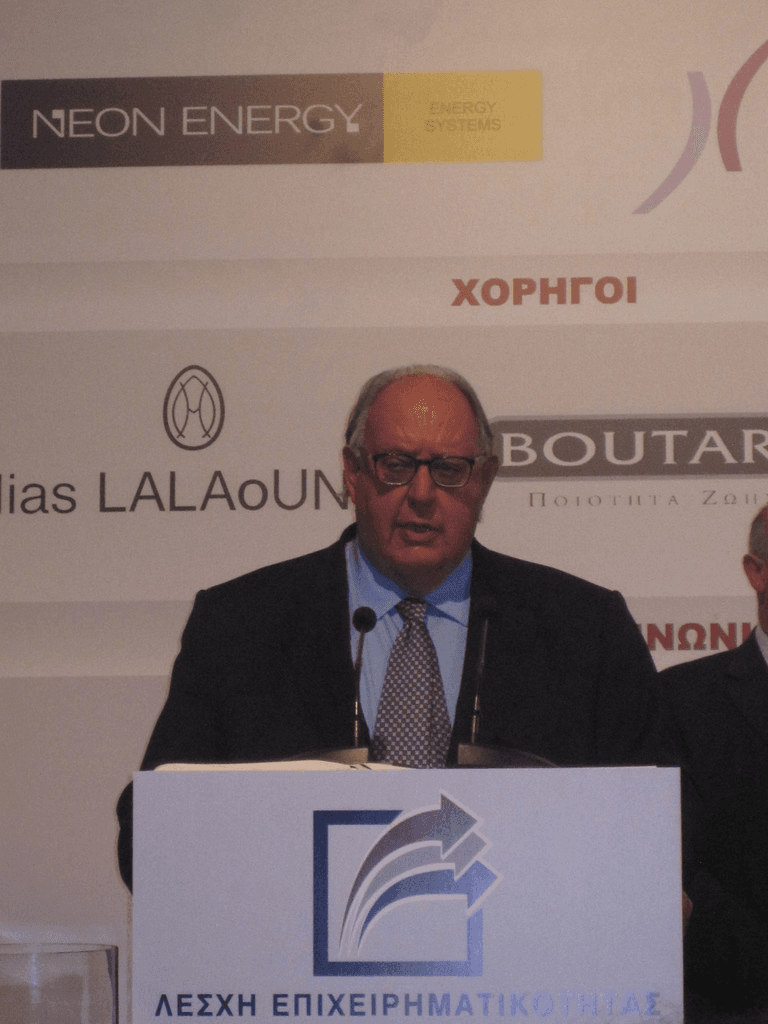

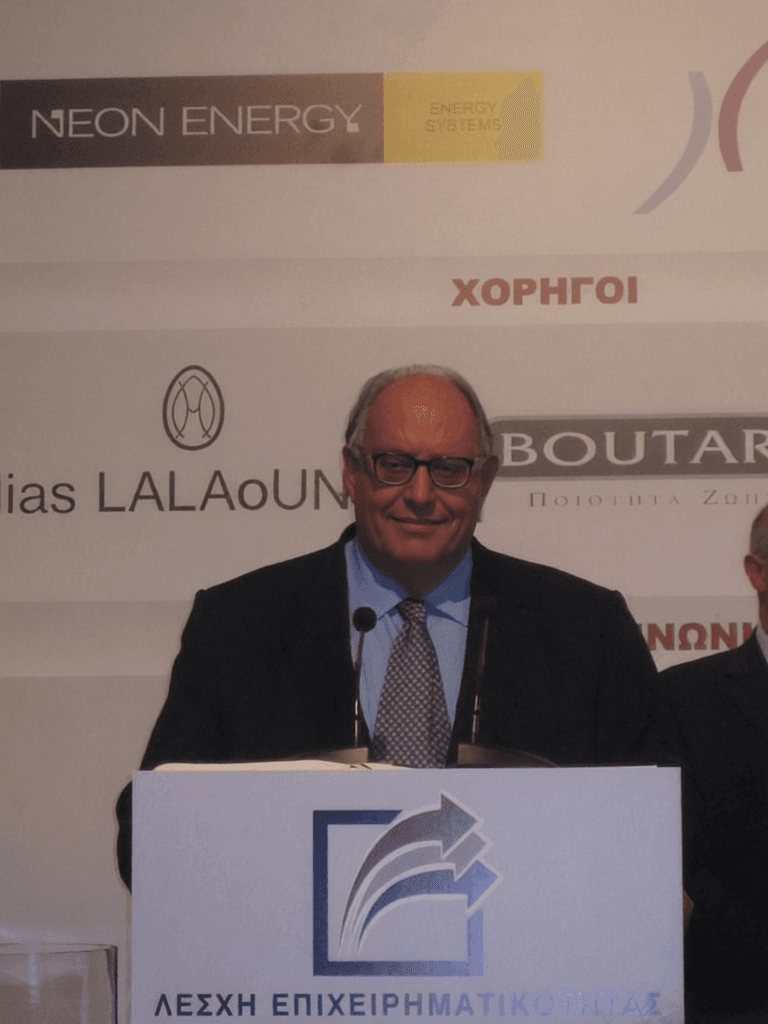

Qwen-Image-Edit

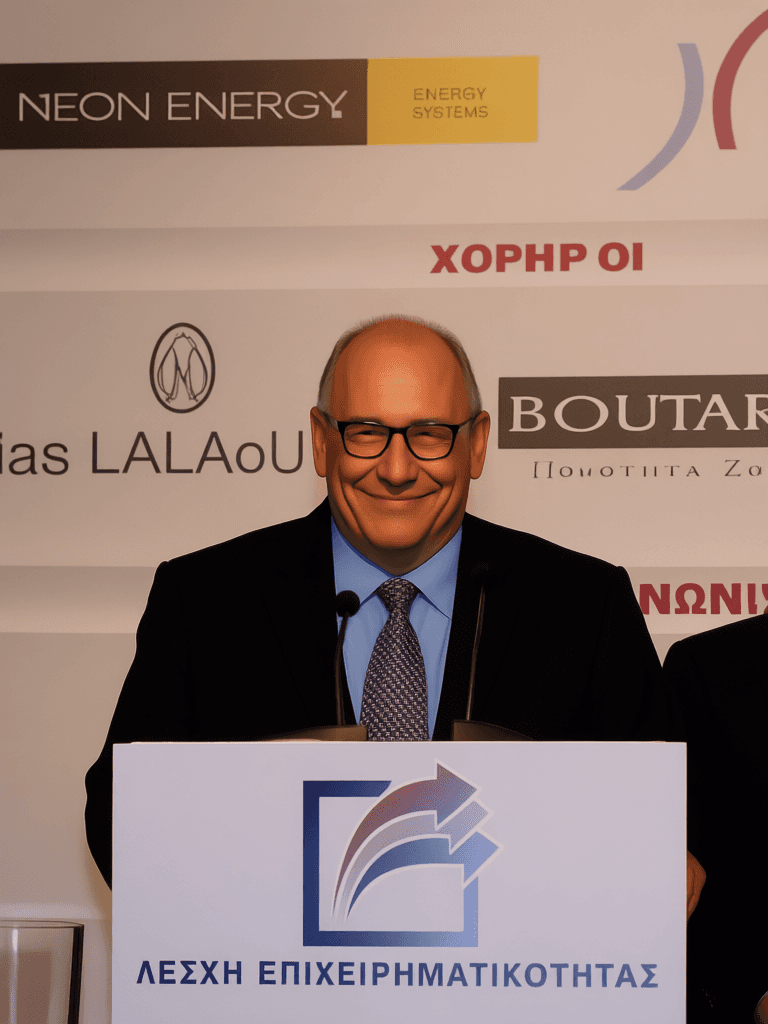

Eigen-Banana (⚡Lightning)

5) Acknowledgements

Huge thanks to the teams and communities that made this possible:

- Pico-Banana-400K authors and Apple for releasing a real-image, instruction-editing dataset. (GitHub)

- Qwen for the Qwen-Image-Edit foundation and tooling. (Hugging Face)

- LightX2V and contributors for Qwen-Image-Lightning few-step distillation work. (Hugging Face)

- The broader open-source ecosystem (DiffSynth-Studio, Diffusers, Open Images, etc.). (Hugging Face)

6) Further Reading

- Pico-Banana-400K — dataset overview & construction details. (arXiv)

- Qwen-Image-Edit (HF) — model card, quick-start, and examples. (Hugging Face)

- QwenImage pipeline (Diffusers docs) — API details for integration. (Hugging Face)

- DiffSynth-Studio docs & repo — engine and quick start. (DiffSynth-Studio)

- NVFP4 on Blackwell — NVIDIA’s low-precision inference overview. (NVIDIA Developer)

7) References

- Y. Qian et al. “Pico-Banana-400K: A Large-Scale Dataset for Text-Guided Image Editing,” arXiv:2510.19808 (Oct 2025). (arXiv)

- Pico-Banana-400K GitHub (dataset stats, download & license: CC BY-NC-ND 4.0). (GitHub)

- Qwen/Qwen-Image-Edit model card (Apache-2.0; semantic & appearance editing; precise text editing). (Hugging Face)

- Hugging Face Diffusers — QwenImage pipeline (integration docs). (Hugging Face)

- lightx2v/Qwen-Image-Lightning (FlowMatch-style scheduler; 8-step example; LoRA distillation). (Hugging Face)

- Qwen-Image-Edit-2509 Lightning 4-steps files (evidence of 4-step checkpoints). (Hugging Face)

About EigenTrain, EigenInference, EigenDeploy — Platform & Availability.

Bring instruction-guided image editing to your product—or adapt to a new domain—fast. EigenTrain turns your data into high-quality editors with efficient fine-tuning. EigenInference delivers production-safe inference optimizations—quantization, sparsity, distillation, routing, and more—to hit strict latency and cost targets across LLM, VLM, and multi-modal workloads. EigenDeploy provides secure, autoscaling deployments across cloud or on-prem with high availability, observability, and cost control for regulated enterprises.

We’ll work with your team to scope fit and deployment options aligned to your dataset licensing and compliance needs. If you are interested in EigenTrain, EigenInference, or EigenDeploy, or in dedicated serving across LLM, VLM, and multi-modal workloads (see here) with SLOs, observability, and on-prem/air-gapped options, contact Eigen AI.

The open-sourced LoRA branch is plug-and-play in Diffusers (QwenImage pipelines) and DiffSynth-Studio, so you can try it locally right away—or visit here to use our hosted demo for maximum speed.